Second try with Kubernetes

Last time, I got completely lost in all the different entities managed by Kubernetes. See http://blog.wafrat.com/kubernetes-is-hard/. Each entity refers to others by labels and selectors.

Let's take it slow and get it right.

Labels and Selectors

Labels

You can assign labels to any resource. Later, you can search/select those resources by using those same labels. See an example at https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/#syntax-and-character-set.

Selectors

- Services target pods through their selector: a Service routes traffic to every pod whose labels match the selector's key/value pairs. Source: https://kubernetes.io/docs/concepts/overview/working-with-objects/labels/#service-and-replicationcontroller

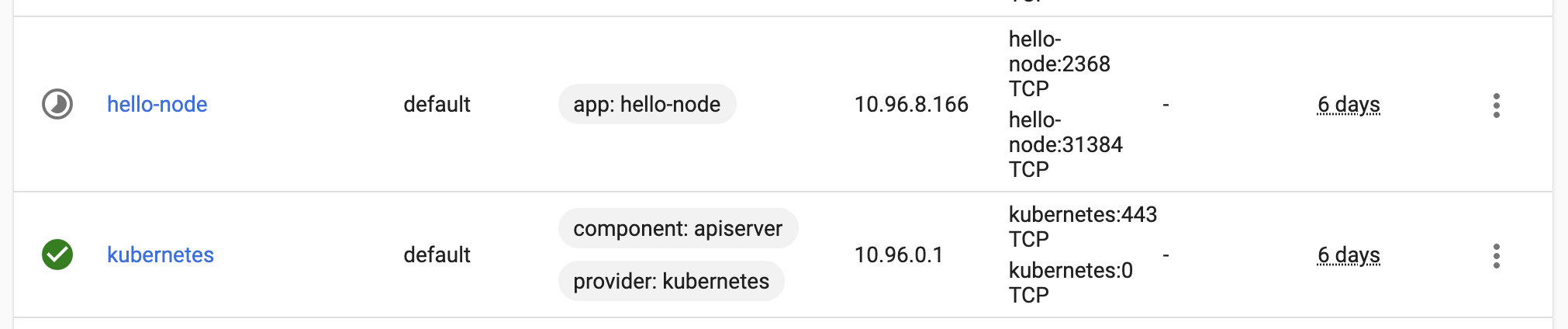

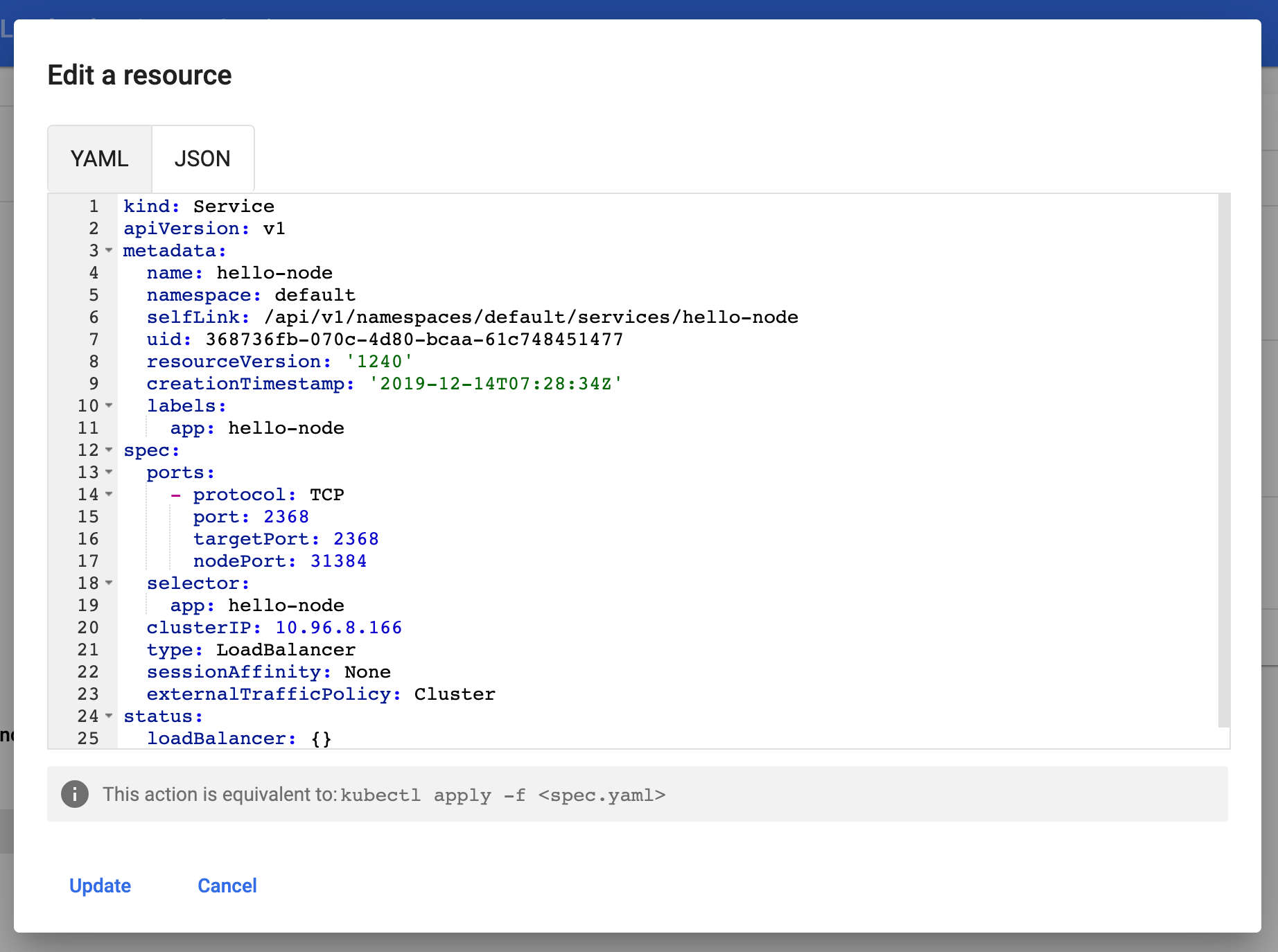
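To make the label/selector link concrete, here is a minimal sketch of a Pod and a Service that finds it. All names, the label value, and the nginx image are made up for illustration; none of them come from my setup.

```yaml
# Hypothetical example: every name and label below is an assumption.
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
  labels:
    app: example          # label attached to the Pod
spec:
  containers:
    - name: web
      image: nginx
      ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: example-service
spec:
  selector:
    app: example          # matches every Pod labeled app=example
  ports:
    - port: 80
      targetPort: 80
```

The Service never mentions the Pod by name. It only knows the label, so any other Pod carrying app: example would also receive traffic.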

Commands vs config files

It is possible to create entities using either commands or yaml files. Whichever way you choose, you can later list the entities from the command line or in the minikube dashboard. In the minikube dashboard, you can also easily delete entities and find their equivalent yaml.

Running ghost with persistent storage

Exposing the port

While the tutorial I had previously used taught how to expose a Deployment, I wanted to start at the Pod level, since the Pod is the most basic entity in Kubernetes.

Here's the yaml for my pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: ghost-pod
    spec:
      volumes:
        - name: task-pv-storage
          persistentVolumeClaim:
            claimName: task-pv-claim
      containers:
        - name: task-pv-container
          image: ghost
          ports:
            - containerPort: 2368
              name: "ghost-server"
          volumeMounts:
            - mountPath: "/var/lib/ghost/content"
              name: task-pv-storage

It relies on task-pv-storage and task-pv-claim which I had set up last time to map to a local folder on my laptop.
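For reference, this is a sketch of what that earlier setup probably looked like, assuming it followed the Kubernetes persistent volume tutorial linked later in this post. Only the claim name task-pv-claim comes from my pod yaml; the volume name task-pv-volume, the manual storage class, the sizes, and the /mnt/data path are the tutorial's values and are assumptions here.

```yaml
# Assumed setup, modeled on the Kubernetes tutorial rather than my actual files.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume        # tutorial's name, an assumption
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/data"         # tutorial's path, an assumption
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim         # referenced by claimName in the pod yaml
spec:
  storageClassName: manual    # must match the volume for the claim to bind
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi
```

The pod only references the claim. Kubernetes binds the claim to a volume whose storage class, access mode, and capacity satisfy it.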

Then it turns out you can use kubectl to expose a Pod directly (https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands#expose). The previous tutorial showed the command to expose a Deployment. This one creates a LoadBalancer service that exposes the pod:

    % kubectl expose pod ghost-pod --port=2368 --type=LoadBalancer
    service/ghost-pod exposed
    % minikube service ghost-pod
    |-----------|-----------|-------------|---------------------------|
    | NAMESPACE |   NAME    | TARGET PORT |            URL            |
    |-----------|-----------|-------------|---------------------------|
    | default   | ghost-pod |             | http://192.168.64.2:31422 |
    |-----------|-----------|-------------|---------------------------|
    🎉 Opening service default/ghost-pod in default browser...

It worked! I can see the Ghost page in my browser.

This is the yaml of the generated service:

    kind: Service
    apiVersion: v1
    metadata:
      name: ghost-pod
      namespace: default
      selfLink: /api/v1/namespaces/default/services/ghost-pod
      uid: bd4f6995-f3cc-4762-a98d-f60174ae664d
      resourceVersion: '20159'
      creationTimestamp: '2019-12-20T07:34:18Z'
      labels:
        app: my-ghost-pod
    spec:
      ports:
        - protocol: TCP
          port: 2368
          targetPort: 2368
          nodePort: 31422
      selector:
        app: my-ghost-pod
      clusterIP: 10.96.74.165
      type: LoadBalancer
      sessionAffinity: None
      externalTrafficPolicy: Cluster
    status:
      loadBalancer: {}
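Note the selector app: my-ghost-pod. When exposing a pod, kubectl expose builds the selector from the pod's own labels, so the pod must have carried that label when I ran the command. The pod yaml shown earlier does not include it, so the fragment below is an inference from the generated Service, not a copy of my file:

```yaml
# Assumed metadata section for ghost-pod. The label is inferred from the
# Service's selector; it does not appear in the pod yaml shown earlier.
metadata:
  name: ghost-pod
  labels:
    app: my-ghost-pod   # what the Service's selector matches
```

This is the labels and selectors mechanism from the start of the post at work: the Service finds the pod by label, not by name.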

More questions

- Does the data really persist in my folder thanks to my claim?

- Can I do the same by using another type of Service?

To answer the question on persistence, I deleted the pod in the dashboard. At this point, refreshing the browser page fails. Then I recreated it by applying the same yaml file:

    % kubectl apply -f ghost_pod.yaml
    pod/ghost-pod created

After a few seconds, the page comes up again. The blog post I had created is still there! The strange thing, however, is that the folder I had used to set up the volume claim is empty. And when I stop and restart minikube, the data is gone. Alright, something is wrong with the volume claim...

... Found it, after re-reading https://kubernetes.io/docs/tasks/configure-pod-container/configure-persistent-volume-storage/#create-an-index-html-file-on-your-node. The volume points to a folder on the minikube Node, not on my laptop. If I connect to the minikube node with minikube ssh, I can find the folder, and indeed the Ghost files are there!

If I run minikube stop then minikube start, the VM boots with a fresh filesystem, so as expected the data is gone. However, https://minikube.sigs.k8s.io/docs/reference/persistent_volumes/ says that whatever is in /data will persist across restarts. Yay.
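Based on that page, one way to keep the Ghost data across restarts might be to point the PersistentVolume at a folder under /data. This is an untested sketch: the volume name ghost-pv, the /data/ghost subfolder, the manual storage class, and the 10Gi size are all assumptions, and the storage class has to match whatever task-pv-claim requests.

```yaml
# Untested sketch: a hostPath volume under /data, which minikube persists.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ghost-pv              # hypothetical name
spec:
  storageClassName: manual    # assumed to match the claim's storageClassName
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /data/ghost         # hypothetical subfolder of /data on the minikube node
```

The folder still lives inside the minikube VM, not on my laptop, so minikube ssh remains the way to inspect it.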